A few weeks ago I gave a talk on my current thinking about AI, risk, and progress, so I thought I would write up the ideas from the talk here. I will do it in a series of short posts, starting with this one.

The core idea of the talk is that, underneath much of what is going on, we are developing a new grammar: a set of interconnected atomic units that allows us to think about the world in different ways. I opened, however, with this quote from physicist John Wheeler:

In any field, find the strangest thing and then explore it.

—John Wheeler

Among the strangest things going on right now are emergent properties in LLMs. This is the observation that as models get larger (however measured, but usually by parameter count), new capabilities come along unexpectedly and unpredictably. Contrast this with engineered systems, where new capabilities are added deterministically: we are adding Feature X at Time Y, and so on. But that is not how LLMs are created, and not how their skills sometimes emerge. They are, in essence, grown, not engineered.

This can be unnerving. When humans brush up against non-deterministic systems whose fluctuations are on human timescales, it doesn't always turn out well. For example, people hate gas price fluctuations, mostly because gasoline is one of the few commodities whose price people are exposed to daily. Up! Down! Up more! As pattern-matching apes, we look for reasons why this might be happening, even if it is mostly noise, most of the time.

One of the byproducts of the organic process via which LLMs are created is that features emerge unpredictably from the process, much like branches from a growing tree, or like buds on a branch. It is not predictable when these will come along, but we accept that they will come.

New features arrive in a similarly non-deterministic fashion in LLMs. They have learned new skills without being explicitly trained on them, from foreign languages to math, and so on. This is a bit like attending a middle school that only teaches English literature based on Dickens's Bleak House, but coming away with the ability to do basic statistics and defend a doctoral thesis on the Plantagenet period. I mean sure, but ... you weren't trained on that.

These biological aspects of LLMs, and the emergent properties that sometimes result, lead to much confusion. For example, seen in that way, what we call hallucination (the tendency of LLMs to produce errant information) is not an error, properly understood. It is simply the model, having been trained on massive amounts of data, which it has encoded and connected neuronally, answering in the way that seems most probable to it given the statistical distribution it has developed. Demanding that it not do this is like demanding that Roger Rabbit not burst through a wall when Judge Doom taps out "shave and a haircut ..." on a bar wall.

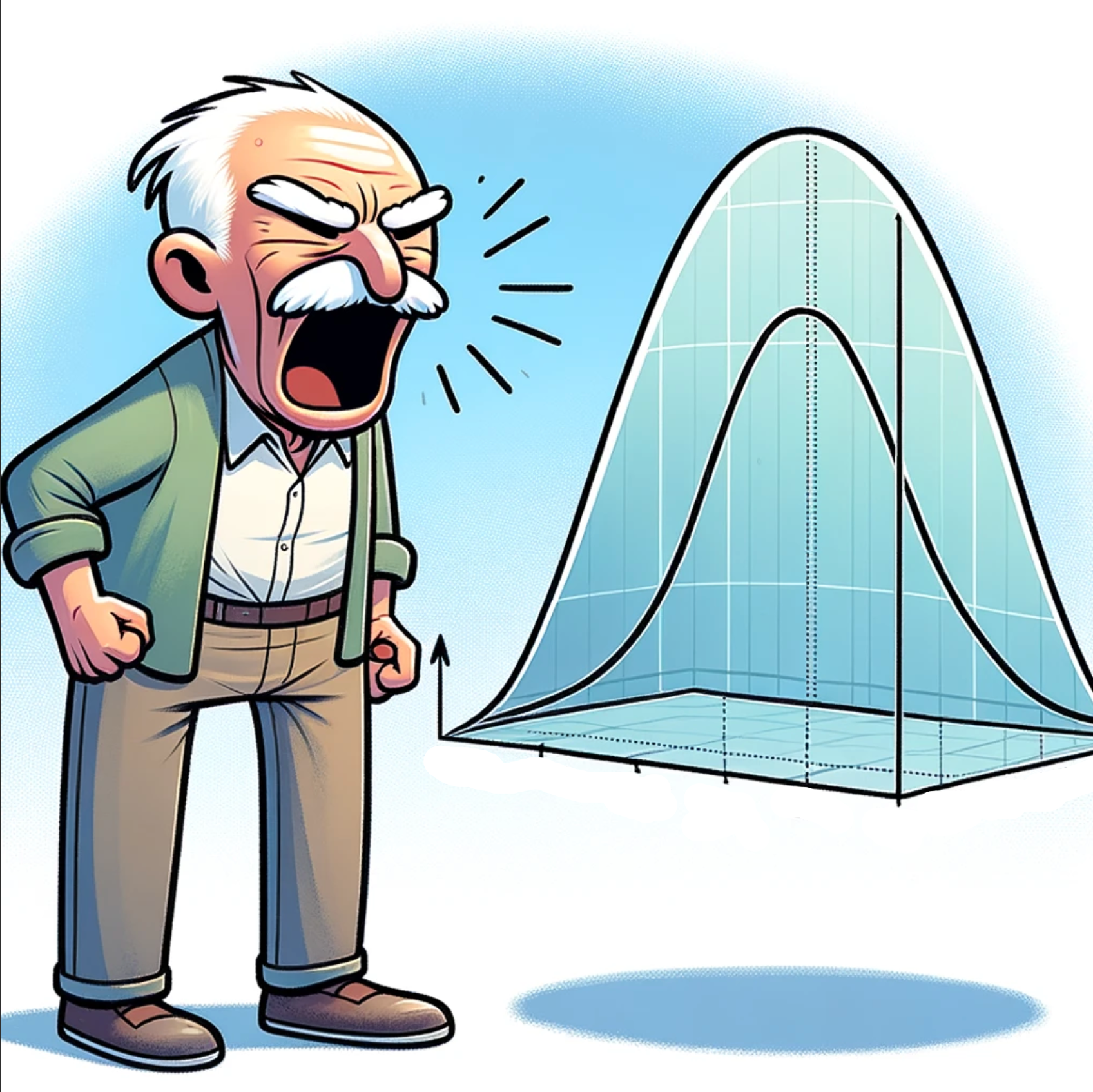

Understood this way, hallucinations are a misunderstanding on our part, not the models'. We have asked the LLM to predict a series of tokens (words, kinda) based on its training set. Having evolved in this non-deterministic way, and sampling from a multinomial distribution it has developed, it produces something that is likely to come next. That we don't like it, or that we complain about it, is like the classic Simpsons meme, adapted: Old man shouts at multinomial distribution. We are complaining about statistical distributions simply doing what they do.
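To make the "sampling from a distribution" point a bit more concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: the four-word vocabulary, the scores, the prompt, and the helper names `softmax` and `sample_next_token` are my assumptions, not taken from any real model. A real LLM does this over tens of thousands of tokens, with scores computed by the network.

```python
import math
import random

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, rng):
    """Draw one token in proportion to its probability."""
    probs = softmax(logits)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores for the word after "The capital of Australia is".
# These numbers are invented; they are not output from a real model.
vocab = ["Canberra", "Sydney", "Melbourne", "Vienna"]
logits = [2.0, 1.5, 0.5, -2.0]

rng = random.Random(0)  # fixed seed so the run is repeatable
draws = [sample_next_token(vocab, logits, rng) for _ in range(10_000)]
counts = {tok: draws.count(tok) for tok in vocab}

for tok, p in zip(vocab, softmax(logits)):
    print(f"{tok:10s} p={p:.3f} sampled={counts[tok]}")
```

In this toy setup the right answer is also the most probable one, but the wrong-but-plausible "Sydney" still gets drawn a sizable fraction of the time. Nothing malfunctioned when that happens; the distribution did what distributions do.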

In the next entry in this series, I dive deeper into some implications of thinking about the world in this way. I work from LLMs to broader implications, steadily developing a Wheeler-esque way of thinking about the emergent universe and its consequences.