The tl;dr

- In Doctor Who: Midnight, an entity mimics and then overcomes the Doctor, flipping roles from follower to controller.

- This mirrors AI progression: from parroting human culture (Phase 1), to tightly mimicking and co-evolving with us (Phase 2), to leading and generating original content humans then emulate (Phase 3).

- The shift to models trained on synthetic data once raised concerns about “model collapse,” but as models surpass humans, self-training is arguably not only viable but superior.

- As models take control of language generation, they may begin shaping human culture and mythos directly, risking a loss of human authorship in the foundational narratives of civilization.

In the classic Doctor Who episode Midnight, showrunner Russell T. Davies puts the Doctor aboard a tourist ship crossing the surface of a planet, and the ship breaks down. The planet's sunlight is lethal, so the Doctor and his fellow travelers must wait hours for rescue. As they sit there, they suddenly hear knocking on the outside of the hull.

There shouldn't, of course, be anyone alive outside to do the knocking. And then stranger things start happening.

First, the ship is rocked by an impact, then something ethereal moves toward a passenger named Sky, and she begins to cower. The knocking continues. The passengers try to work out what's wrong with Sky, at which point she turns and begins imitating what they say to her and to each other.

DOCTOR: Sky? It's all right, Sky. I just want you to turn around, face me.

(Sky turns slowly and stares into the torchlight.)

DOCTOR: Sky?

SKY: Sky?

DOCTOR: Are you all right?

SKY: Are you all right?

DOCTOR: Are you hurt?

SKY: Are you hurt?

At first it is strange, then maddening, then bizarre, as Sky mimics the Doctor while he recites the first 30 or so digits of pi. The passengers try to engage with Sky, to understand what's going on or what has gone wrong. But it's no use; she keeps imitating.

Sky zeroes in on the Doctor, imitating his every word, faster and faster. The gap between what the Doctor says and what she says shrinks until it collapses entirely, and the two are speaking at the same time. She has learned his cadences, style, and phrasing: they are phase-locked. The Doctor and Sky are, verbally, the same person.

But that doesn't last long. Suddenly Sky leaps ahead and speaks first. She is still imitating the Doctor, but now from the front. He has become her puppet.

JETHRO: She spoke first.

SKY: Oh, look at that. I'm ahead of you.

DOCTOR: Oh, look at that. I'm ahead of you.

HOBBES: Did you see? She spoke before he did. Definitely.

JETHRO: He's copying her.

Having gained control of the Doctor, the entity now turns things around, using his own voice to blame him for everything that has gone wrong. It was him, always him, never the "thing", whatever it was before it took control.

SKY: It killed the driver.

DOCTOR: It killed the driver.

SKY: And the mechanic.

DOCTOR: And the mechanic.

SKY: And now it wants us.

DOCTOR: And now it wants us.

This Doctor Who episode comes to mind regularly when I think about where we are in the current AI frenzy. I see three grand phases.

Phase 1

Large language models (LLMs) were initially seen as parrots, even if stochastic ones. They were trained on our cultural artifacts, from books and blog posts to Reddit threads and YouTube comments. Having learned from those, models could generate similar language fluently, often indistinguishable from professional prose and sometimes able to fool AI-detection tools. (Spoiler: The text I tried this on was mostly AI-generated.)

We are already seeing people rapidly offload much of their thinking, purchasing, planning, coding, writing, and more to AIs. Charitably, this means we are co-evolving with our tools, which isn't historically unusual, even if cognitive offloading at this scale is unprecedented. (There is already a phrase for its dumbing-down effects: "digital dementia".) The human brain is energetically expensive, consuming something like 20% of basal metabolic rate, and it cheerfully offloads anything it can.

The river of AI already runs through everything we do. Raging torrents of what is often called "AI slop" are swamping music, video, audio, and text. Some claim the Internet itself has already been repopulated, as the "dead internet" theory postulates: most of what you think is human online ... isn't.

Phase 2

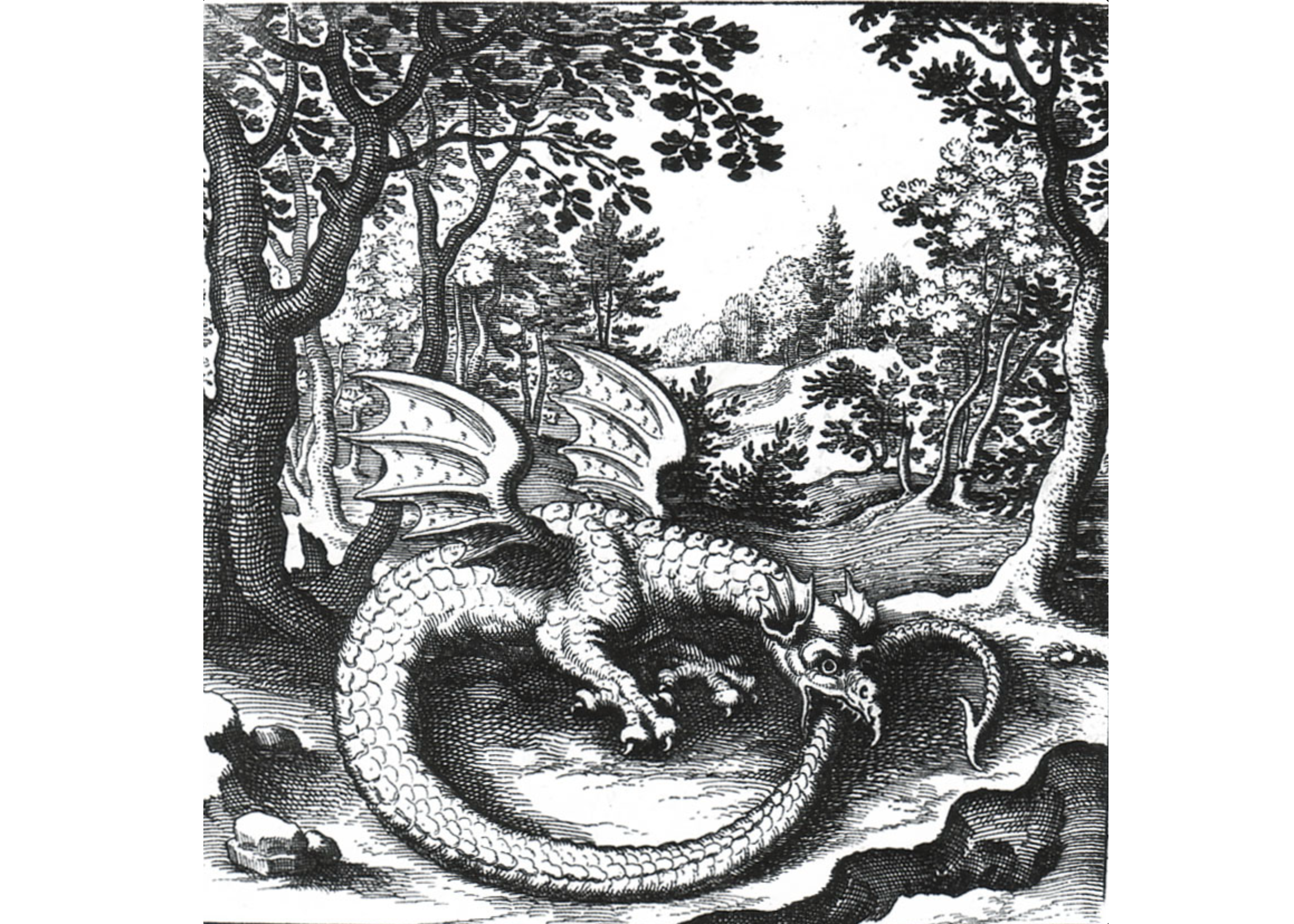

The gap is shrinking, which marks the second phase: rapid and discomfiting mimicry, like Sky's. We should expect this mimicry to be constant and accelerating, as news stories, memes, and trends become algorithmic triggers for more stories, memes, and trends. Everything will trigger everything in a non-stop loop of interlocking co-evolution, an onanistic ouroboros.

Phase 3a

But it won't stop there. Recall that the third stage in Midnight, after co-evolution, was Sky getting ahead of the Doctor, with him following slavishly behind.

SKY: Oh, look at that. I'm ahead of you.

DOCTOR: Oh, look at that. I'm ahead of you.

There is a reversal coming in the training loop. Models originally learned from people, consuming vast amounts of human-produced material, some of it hundreds of years old, that had found its way onto the public Internet. Then models and people began co-evolving in real time, as is happening now.

But models will increasingly learn from data they create themselves, often called "synthetic data". While some have argued that this reliance on synthetic data will lead to model collapse, Google DeepMind's Demis Hassabis will have none of it. In a recent presentation, he offhandedly claimed that relying on synthetic data for video training won't cause model collapse. Why? Because, as he sees it, models are rapidly becoming better at video than humans are.

We know there's a lot of worries about this so-called model collapse. I mean, video is just one thing, but in any modality, text as well ... I don't actually see that as a big problem. Eventually, we may have video models that are so good you could put them back into the loop as a source of additional data ... synthetic data, it's called.

—Demis Hassabis, Google DeepMind

Phase 3b

While it matters to computer scientists that models not suffer indigestion, or even death, from data autocoprophagia (do not look that up before a meal), there is a deeper societal import once models are creating their own data and influencing people and culture.

Coda

As Yuval Noah Harari argues in his recent book Nexus, to lose control of language is to lose control of culture, of civilization, of our myths: "Civilizations are born from the marriage of bureaucracy and mythology". We are handing control of language and of myths to models, not just learning from them but following them. Like the Doctor in Midnight, we are passing from leader to slavish follower. New society-making myths are ahead, and many won't be ours.