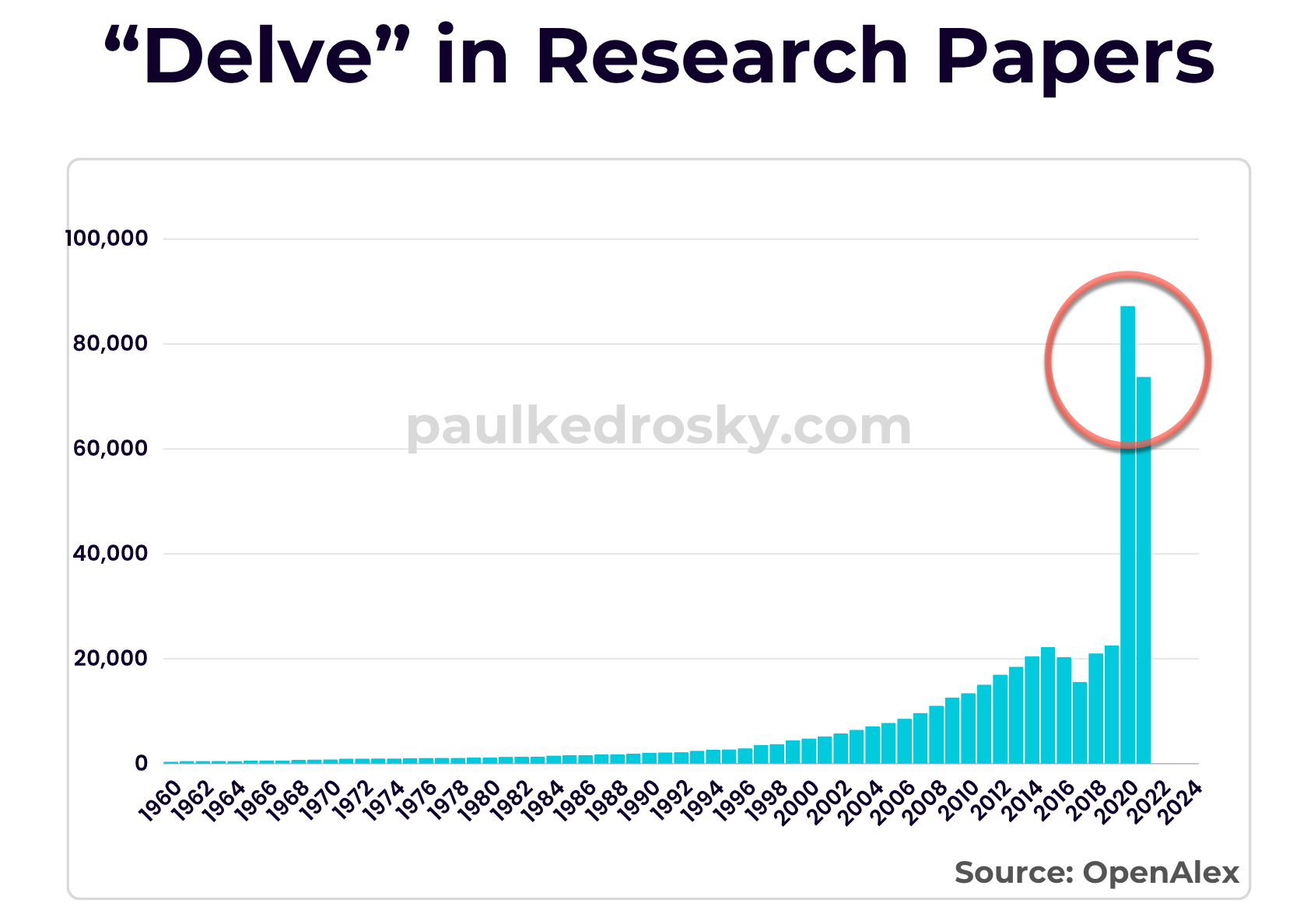

Something strange has happened to the word "delve" in the last two years. Its usage has exploded in everything from Amazon reviews, to undergraduate essays, to academic papers. There were, for example, more papers with the word “delve” in them in 2022 and 2023 together than in the prior 500 years combined. Everyone is on the delve train.

It all has to do with a weird and important quirk of large language models (LLMs), understanding which requires a trip back through the Lord of the Rings, early American settlements, a 17th-century pastor, and Milton.

Initium

In 1636 a little-known English pastor was prosecuted for nonconformity and emigrated to the Massachusetts Bay Colony. A Calvinist, Samuel Newman graduated from Trinity College, Oxford, and took orders from the Church of England. He was a tireless researcher of religious matters, which was likely a factor in his eventual refusal to follow all Anglican tenets.

Despite his research, however, Newman was no atheist. He was a devout Calvinist, and he took his beliefs and research with him to the Colonies. He was initially a preacher at Dorchester, before becoming pastor of the church at Weymouth, where he stayed until 1643. He left that year, taking part of his congregation with him as he helped found the new town of Rehoboth, Massachusetts.

All the while, predating his departure from England, Newman had a secret. He was working on his own Manhattan Project, a textual bomb that he started building in 1630 and that would be released in 1643 and change the world.

Newman's Concordance

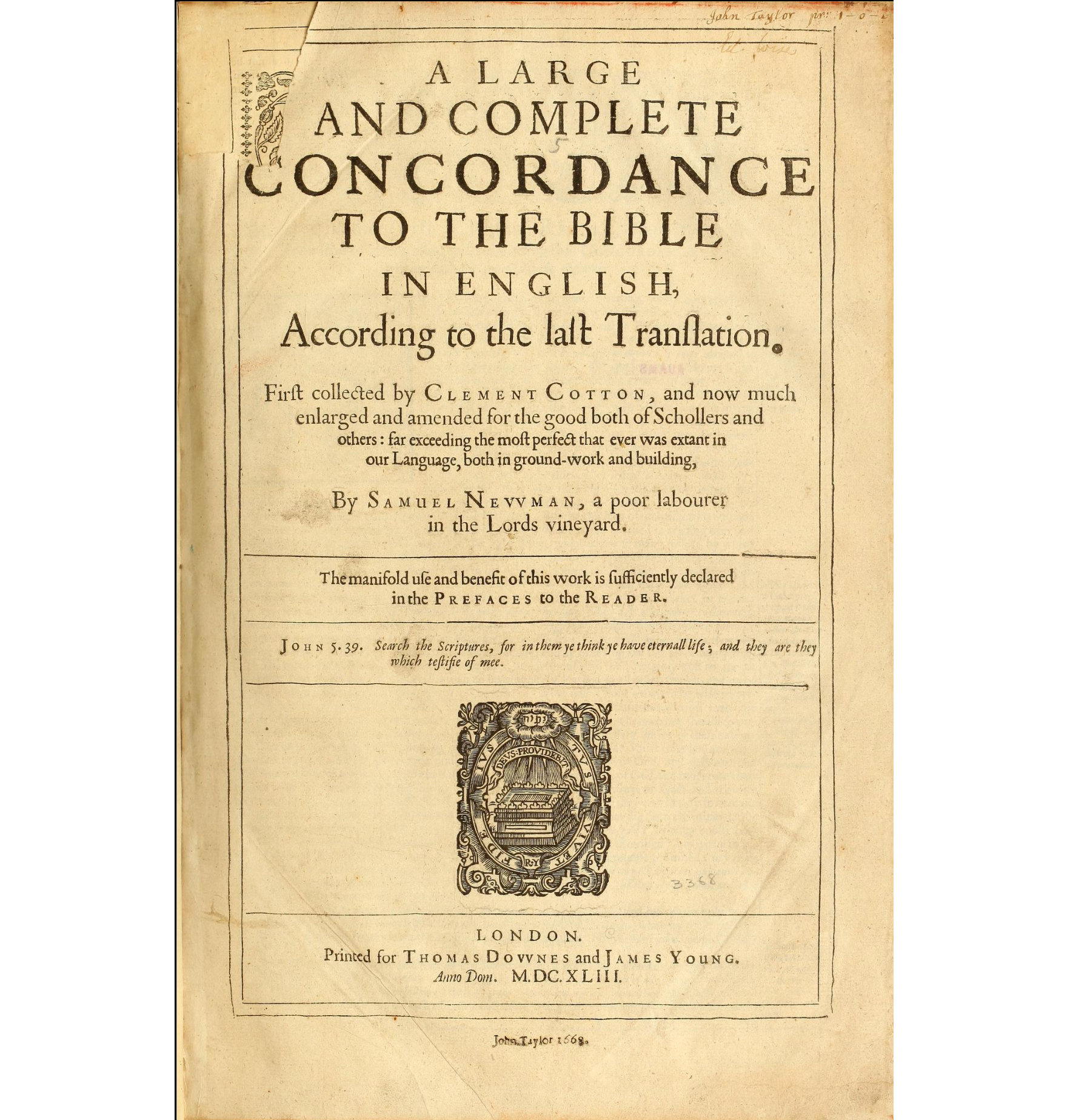

In 1643 Newman published his Concordance in England. Its full name: “A LARGE and COMPLETE Concordance to the BIBLE in ENGLISH, According to the Laſt Tranſlation”. Newman built on prior such projects, in collaboration with Clement Cotton, "for the good both of Schollers and others".

Early books lacked indexes. There was generally no way to search for specific words or passages, forcing people to rely on the knowledge of other researchers, like monks and scholars. As a result, concordances are best thought of as an index+, with topics and keywords tied to page numbers for a particular work. Concordances are a kind of Google for a pre-Google era.

The first concordances long predated Newman's work. The first biblical concordance was for the Vulgate Bible by Hugh of St Cher (d. 1262) who allegedly had 500 friars assisting him. Newman's concordance, mostly his own work, came out hundreds of years later, and was the third English language Biblical concordance. Its main advances were that it was in English, not Latin, and that it was more comprehensive than prior biblical concordances had been.

By the time Newman published his Concordance, biblical scholarship was in turmoil. The Westminster Assembly began the same year, with theologians and clergy working to restructure the Church of England. The goal was modernized church governance and doctrinal standards. As literacy rose and scholarship was democratized, religion was fragmenting and the Church wanted to reinforce control.

Vile Pamphlets, Curious Arts, Profane Stories, and Lascivious Poems

Before going deeper into how Newman's Concordance changed the world, with effects rippling to today's AI models, it helps to get a flavor of Newman's conflicted attitude about his creation. It is not, however, easy reading, with florid passages, frequent call-outs to God, unusual typography ("ſ"s are "s"s), and regular adjurations to take care in using this dangerous new ... tool.

To give you a flavor, here is Newman in the introduction:

What will it boot a man to be wiſe to perdition, and to go to hell by book? In vain is it to ſpill ſo much precious time as moſt men doe upon vile pamphlets, curious arts, profane ſtories, and laſcivious poems: whereas they ſhould ſequeſter (if God were in all their thoughts) all their ſpare time from the neceſſary duties of their calling to the reading, hearing, and meditating upon the word which through the Spirit of grace, and their devout prayers, will enlighten their underſtanding with the knowledge of God, and enflame their affections with the love of God, & eſtabliſh their hearts with the promiſes of God, and moderate their joyes with the feare of God, and mitigate their afflictions with the comforts of God, and regulate all their thoughts, words and deeds with the precepts of God.

In short, Newman is saying that research is a fine thing, as long as one keeps it to things about which God would likely approve, like God. Or about God. And, when doing so, avoid being over-curious, over-skeptical, or anything of the like. These are traits, one would think, that helped get Newman himself into trouble, so he knows whereof he cautions.

Newman continues:

Why delve they continually in humane arts and ſecular ſciences, full of dregs and droſſe? why do they not rather dig into the mines of the gold of Ophyr, where every line is a vein of precious truth, every page leafe gold?

Saying the quiet stuff out loud, Newman argues that people researching non-religious matters—even if via terrific concordances like his, he might add—are not only wasting their time, but likely to be doing evil. They should be mining the gold of Ophyr, he says. What is Ophyr? A mythical land referred to mostly in the Old Testament, where people allegedly stumbled over treasures on a regular basis. Newman's point is that, with a good concordance (see also: his) scholars would find the textual streets paved with gold.

(In an irony, the idea of Ophyr's riches crossed over to California during the Gold Rush days. There is a Mount Ophir, as well as a town of Ophir, both intended as optimistic auguries of the wealth ahead. It turned out the riches were not in the Sierras but on the coast, a point to which I will return shortly.)

You can sense Newman's unease in this passage. While he has created a valuable tool, he thinks, he also worries about its use by others. It is a foreshadowing of nervousness about future indexing technologies, from Google search to AI. This is Newman's early version of "Don't be evil", Google's original motto.

Why Delve They in Humane Arts and ſecular ſciences?

Newman implicitly freights intellectual exploration with danger and risk. While the idea of risky exploration had been around forever, longer than legends like Icarus, the intellectual risk associated with over-curiosity twinned with tools to accelerate that curiosity was a new and modern idea.

How new? It was so new that Newman's use of the word "delve" in this context was, according to the OED, the first example of the word being used outside its origins as a synonym for digging.

As existing words gain new meanings they are deemed increasingly polysemous. The process by which they gain those added meanings is called "semantic expansion", and it happens through usage. Newman has done that here: he created a new, allusive meaning for the 9th-century word "delve", by using it in his cautious way about people delving into useless matters, i.e., matters not having to do with the Bible.

Semantic expansion happens all the time, of course. Words are viruses, living, dying, breeding, and absorbing other linguistic genetic material in their quest to go on living, even if words themselves have no internal energetic force. Definitions can pile on definitions, as is the case with "run", which has, according to the OED, a staggering 645 meanings.

Digging and its Consequences

Delve is only moderately polysemous, with 11 definitions, according to the OED. The word itself, with its original digging usage, dates to King Alfred’s translation of Boethius’s Consolation of Philosophy. Alfred completed the translation while he was King of Wessex, perhaps in the late 880s or early 890s, in an early Great Books exercise, trying to elevate the locals. It took roughly seven and a half centuries for the word to acquire, via Newman, the allusive meaning of investigating something.

This idea of "danger below" was not unique to Newman, of course. It runs through Milton’s Paradise Lost, Beowulf, and early fairy tales. Monsters, dangers, and even sometimes hell could be found in deep enough holes, making digging too deeply a predictable path to bad outcomes.

Over time delve has become so casually freighted with consequences that it has been anointed with the tvtropes.com status of “dug too deep” when something goes predictably wrong because people messed around with holes.

The canonical version of this was the dwarves in Tolkien’s Lord of the Rings, who were forever digging too deeply in all the wrong places. This eventually led to the monstrous Balrog being found when the Dwarves of Moria “delved too greedily and too deep”. They fucked around (with delving) and found out. And, according to Gandalf, there are worse things than Balrogs further down, which puts limits on one’s prudent delving.

In these modern usages delve captures both of its evolving meanings, that of digging into the earth (from King Alfred), and that of investigating with risks (from Newman). The combined act touched something deep within humans, both as investigation and as danger. Using "delve" in this exploratory context also feels vaguely intellectual, which gives it a sort of Look Me, Smart halo, and that helps.

Delve Has Entered the LLM Chat

As I wrote at the beginning of this essay, something weird has happened to delve in recent years. Its usage has exploded, especially in academic papers. Check the following graph from OpenAlex to see what has happened to delve in the last fifty years. It is … remarkable. There were more papers with the word “delve” in them in 2022 and 2023 together than in the prior 500 years combined.

What has happened? ChatGPT, of course. As many will know, large language models have predictable textual twitches, and throat-clearing words appear regularly in large language model-generated text. High among them? The word “delve”: it is a Great Filter for AI vs non-AI text.

Why it’s there, however, is a trickier question. In a sense, delve's journey from physical excavation to metaphorical exploration mirrors human intellectual progress, from the physical to the digital and intellectual.

Its current overuse in LLM-generated text reveals a related phenomenon. The term's multi-layered meanings, coupled with its slightly elevated rhetorical tone, make it an attractive choice for AI systems trying to sound authoritative and academic. In this way the frequent appearance of 'delve' in AI writing serves as a linguistic marker, a reminder of LLMs' tendency to default to words that imply depth and thoroughness, often at the expense of more varied and precise language choices.

In plain language, putting “delve” into responses is the model trying to sound smarter than it is. But models are wrong. Delve-drops all over one’s text are not a signifier of intelligence so much as a signal that one has used an LLM to generate the text.

No-one Really Knows Anyone, Not Really

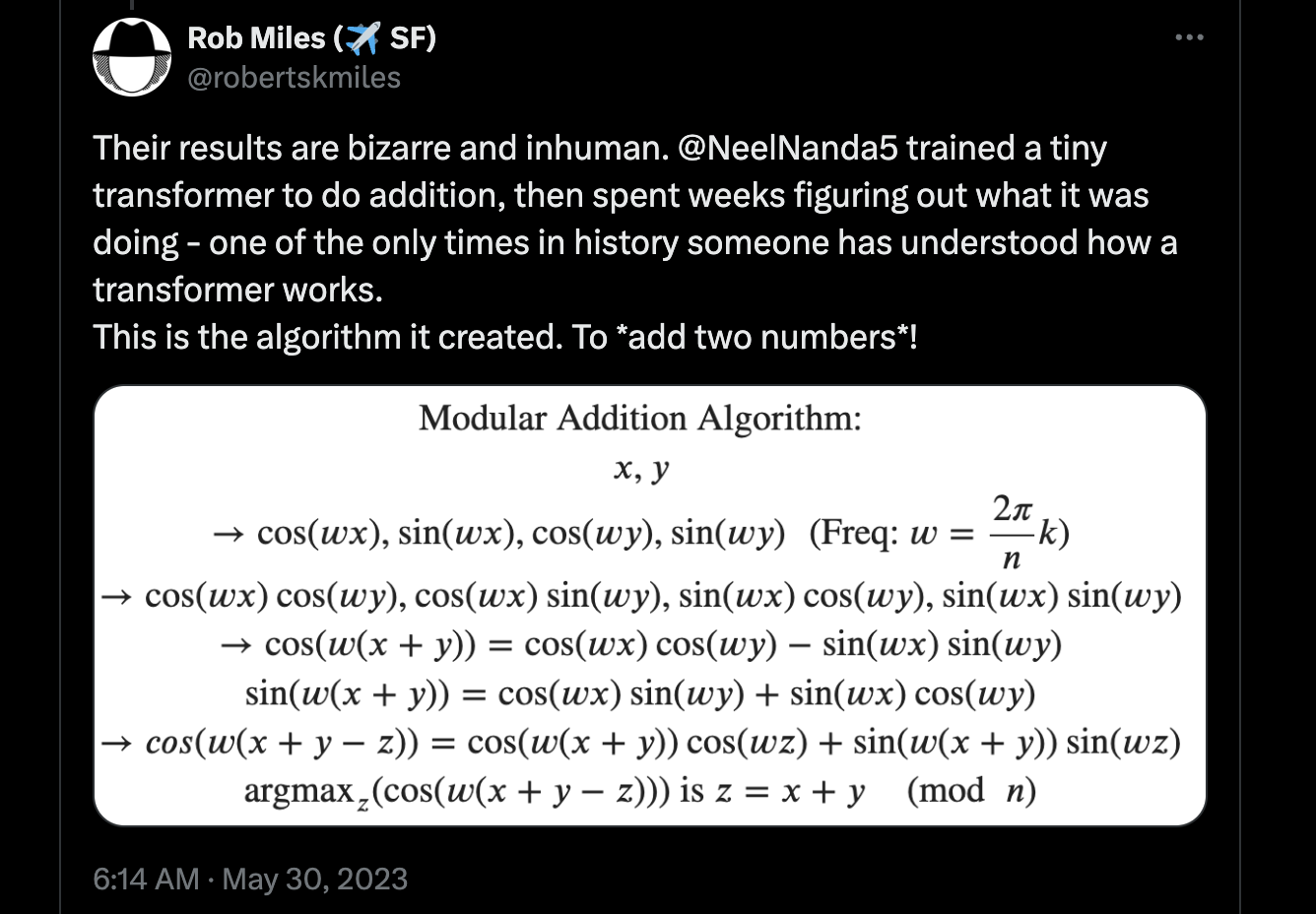

Can we be sure this is what is going on? No, given the difficulty of forcing models to explain their weights and choices. And they can seem otherworldly in that regard.

A year or so ago someone trained a transformer model to do basic math, and then analyzed how it learned to do so. The result is like peeking into an alien’s brain, one that has derived basic principles of mathematics by observing precocious toddlers stacking rocks in bus shelters. I mean, I guess you could get to Whitehead and Russell's Principia Mathematica from there, but I wouldn’t count on it making any sense to anyone, which this doesn’t.

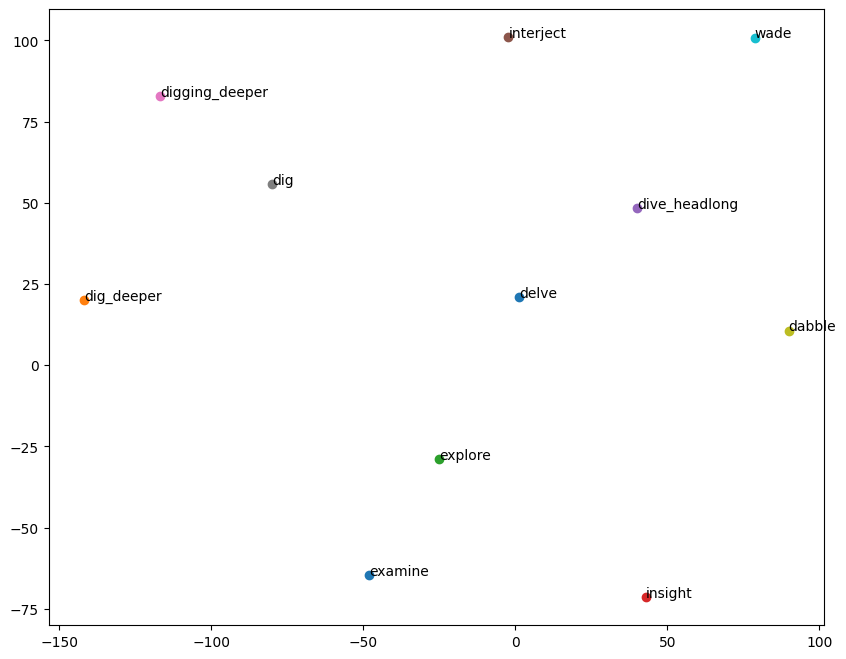

We can do something similar in large language models, at least in basic terms. We can ask the model to tell us the words most similar to "delve", in their encodings, but that aren't the word or a derivative.

Imagine Word2Vec as a sophisticated library where each word has a unique position on a 300-dimensional map, based on its meaning and context. First, I loaded this library and asked it to show the closest "neighbors" to "delve" on this map. I then filtered out any neighbors that have the substring "delve" in them, sort of like, in map terms, excluding nearby restaurants with similar names.

I then took those neighboring words and converted their high-dimensional coordinates into a simpler, 2-dimensional map using a technique called t-SNE. Think of t-SNE as a tool that flattens a crumpled-up paper map into a 2D version while preserving the relative distances between locations.

Finally, I plotted these points on a graph, with each point representing a word, and labeled them accordingly. This visual representation helps you see how "delve" and its similar words are positioned relative to each other in a simplified space, making it easier to understand their semantic relationships.

Here are the words most similar to "delve", from an LLM's point of view, based on a widely-used data set of Google News.

| Most Similar Words |
|---|
| dig_deeper |
| explore |
| insight |
| dive_headlong |
| interject |
| digging_deeper |
| dig |
| dabble |
| wade |
| examine |

Now here is how the model "thinks" about their position in space, in terms of their distance from "delve". You can get a sense of how it assesses words like "explore" and "examine" as being sufficiently semantically similar that it can swap "delve" in for them.

Finis

We cannot know exactly why models want to freight so much text they generate with the word “delve”. Yes, there is an inkling from forcing models to show their inner workings and the words that they deem similar, as I have done above.

But, as we have learned, there are deep waters here: our conflicted relationship with technology, our demand for certainty, and our confusion about what LLMs do, and how they do it. All of these are in the mix.

What we do know, however, given the history of "delve" and its complex and eschatological etymology, is that it is a more intriguing choice than it might appear at first glance. Delving into something is a grandiloquent cliché and a quest for implied certainty.

But it is also a cultural signifier, one with a thousand years of history at the intersection of religion, politics, science, risk, and literature, and one that is now being reflected back to us. Models are channeling all that history, in which is embedded our uneasy relationship with risky technologies. Delve is speaking to us in our own words as it changes the world, if we will listen.