A few things at the intersections of complexity, finance, and health. Today's topics:

- Utah's dirty snow from dust blown off dried lakebeds is causing earlier snowmelt

- Financial AI relies heavily on ingesting news, which is, in turn, increasingly written by AI

- Obesity drugs may threaten a homeostasis effect where diets get worse

- Fossil fuel companies insist that more clean energy means more fossil fuels

01. Utah's Dirty Snow

A hallmark of complex, coupled systems is unexpected and accelerating feedbacks. We see that in action in Utah, where the Great Salt Lake (GSL) reached record low levels in 2021 and 2022, exposing more of the dried lakebed. That lakebed becomes dust, which blows onto snow in the nearby Wasatch Mountains and darkens it, decreasing its albedo and causing it to melt sooner. The winter of 2021/2022 had the highest dust-on-snow (DOS) concentrations in the data series' history, which sharply accelerated snowmelt.

Key points:

- Dust emitted from the dry lakebed of Utah's Great Salt Lake (GSL) contributed 23% of total dust deposition on the adjacent Wasatch snowpack during the 2022 snowmelt season.

- Dust darkening, quantified through mass and energy balance modeling, caused snowmelt to occur more than 2 weeks (17 days) earlier than it would have without dust.

- The timing and extent of dust-accelerated snowmelt would have been even worse if the spring had been drier, but frequent snowfall covered dust layers, delaying the accelerated melting.

- The 2022 season had both the highest dust concentrations and the most dust deposition events since 2009.

Source: The shrinking Great Salt Lake contributes to record high dust-on-snow deposition in the Wasatch Mountains during the 2022 snowmelt season https://iopscience.iop.org/article/10.1088/1748-9326/acd409
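
To make the albedo mechanism concrete, here is a back-of-the-envelope sketch. It is not the paper's mass and energy balance model: it considers only absorbed sunlight, assumes the snowpack is already at 0 °C, and uses illustrative albedo and sunlight values that are assumptions rather than figures from the study.

```python
# Rough sketch: how a lower albedo translates into faster melt.
# All input values below are illustrative assumptions, not data from the paper.

LATENT_HEAT_FUSION_J_PER_KG = 334_000  # energy needed to melt 1 kg of ice at 0 °C


def daily_melt_mm(albedo, incoming_shortwave_w_m2, daylight_hours=12.0):
    """Melt (mm of water equivalent per day) from absorbed sunlight alone.

    Ignores longwave radiation, turbulent fluxes, and refreezing.
    """
    absorbed_w_m2 = (1.0 - albedo) * incoming_shortwave_w_m2
    energy_j_m2 = absorbed_w_m2 * daylight_hours * 3600
    # 1 kg of meltwater per square metre is a 1 mm layer of water
    return energy_j_m2 / LATENT_HEAT_FUSION_J_PER_KG


clean = daily_melt_mm(albedo=0.8, incoming_shortwave_w_m2=500)  # assumed clean snow
dusty = daily_melt_mm(albedo=0.6, incoming_shortwave_w_m2=500)  # assumed dusty snow
print(f"clean: {clean:.1f} mm/day, dusty: {dusty:.1f} mm/day")
```

The point of the sketch is the leverage: dropping albedo from 0.8 to 0.6 looks modest, but it doubles the fraction of sunlight absorbed (0.2 to 0.4), and with it the melt driven by sunlight.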

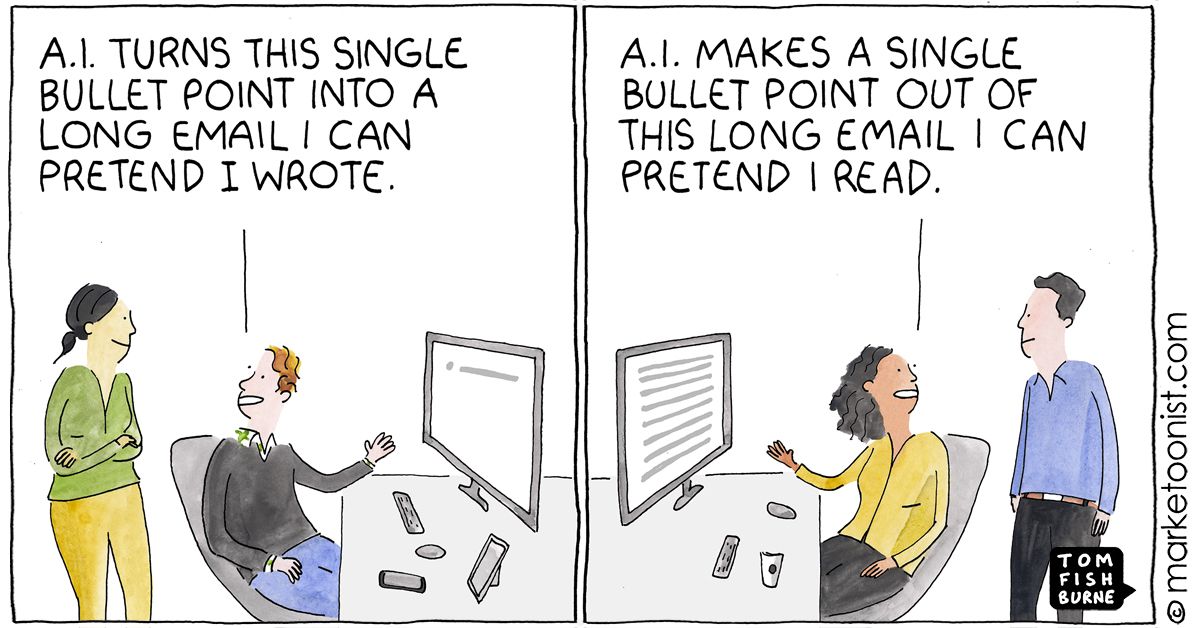

02. AI Ingests Itself

Machine learning algorithms in finance have long relied heavily on ingesting news, trying to find tradable patterns and signals. In the most recent JPM survey of large investors, more than half said they use such algorithms, and the single largest source of content remains journalism. At the same time, the survey says that most investors believe that a large fraction of future journalism will be written by AI.

This seems likely to lead to feedback effects. If stories are written by AIs and then ingested by pattern- and signal-seeking AIs, many of which follow similar cues (sentiment, novelty, surprise, and so on), we should expect even more volatility in the future: more algorithms will be responding to the same signals, generated by news engines that use similar methodologies to produce what we used to call news. This will be risk-increasing and alpha-decreasing.
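
One way to see why shared signals raise volatility is the standard variance formula for a sum of equally correlated quantities. The sketch below is a toy illustration, not a market model: the function name, the number of algorithms, and the correlation values are all hypothetical.

```python
import math

# Toy illustration: total order flow from n algorithms whose individual
# orders each have the same standard deviation and the same pairwise
# correlation. All parameter values used below are hypothetical.


def aggregate_flow_std(n_algos, per_algo_std, correlation):
    """Standard deviation of the summed orders of n equally correlated algorithms.

    Uses Var(sum) = n * s^2 * (1 + (n - 1) * rho).
    """
    variance = n_algos * per_algo_std**2 * (1 + (n_algos - 1) * correlation)
    return math.sqrt(variance)


for rho in (0.0, 0.5, 1.0):
    print(rho, round(aggregate_flow_std(100, 1.0, rho), 1))
```

With 100 independent algorithms, individual bets largely cancel and aggregate swings scale with the square root of the count. As the algorithms converge on the same cues, the cancellation disappears and aggregate swings scale with the count itself.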